Silicon Valley is wrestling with a Pentagon-shaped ethical question, and on April 25 the arena for this particular fight was a wood-paneled ballroom at Stanford University, which hosted a listening session of the Defense Innovation Board. The Board exists to advise the secretary of defense on modern technology, and for nearly two hours it heard members of the public express their fears and hopes about applying robot brains to modern warfare.

Before the public weighed in, the tone of the hearing was set by Charles A. Allen, deputy general counsel for international affairs at the Department of Defense. Allen’s case was straightforward: As the Pentagon continues to justify the pursuit of AI for military applications to a sometimes-hostile technology sector and an ambivalent-at-best public, it will draw upon the language of precision and international humanitarian law.

“The United States has a long history of law of war compliance,” Allen said, after going over rules set out by then-Deputy Secretary of Defense Ash Carter in 2012 on how the department should acquire and field autonomous weapons. Most importantly for the listening session, Allen emphasized how those rules repeatedly require compliance with the laws of war, even when there aren’t specific laws written to address the kind of weapon used.

This structured the rest of Allen’s remarks, in which he laid out three ways to inform the ethical use of AI by the Department of Defense. The first was applying international humanitarian law to the overall action the AI is supporting, such as applying the rules for how attacks must be conducted when the AI assists in an attack. The second was emphasizing that the principles of the laws of war, such as military necessity, distinction, and proportionality, still apply to AI.

“If the use of tech advances universal values, [the] use of tech [is] more ethical than refraining from such use,” Allen said, noting that this was an argument the United States made in Geneva before the Group of Governmental Experts working under the Convention on Certain Conventional Weapons. The talks to formulate new international standards for how all nations may use autonomous systems in war are ongoing and have yet to reach consensus.

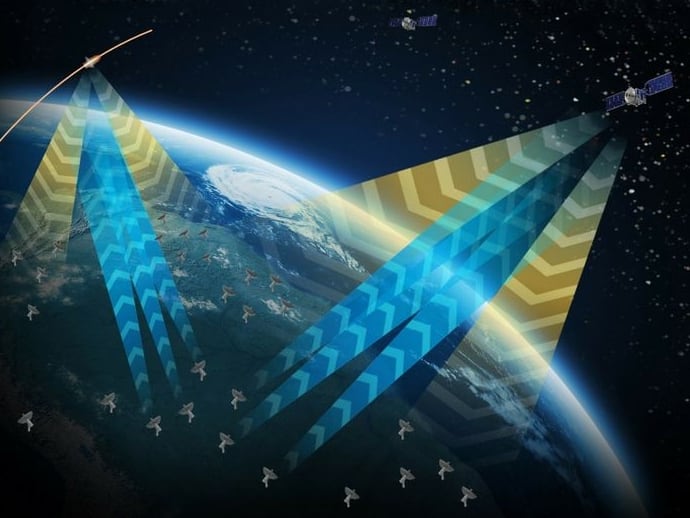

Allen’s third argument hinged on using the technology expressly to improve civilian protection. After comparing the lifesaving potential of AI on the battlefield to that of AI in self-driving cars (an analogy with some deep limitations given present-day technology), he went on to argue that AI used in war has an explicit lifesaving mission, noting how weapon systems with automatic target recognition could strike more accurately and cause less harm to civilians.

“By using AI to identify objects autonomously, analysts could search through more data, focus on more high-level analysis,” Allen said, specifically mentioning the beleaguered Project Maven. Project Maven was designed as a way to process military drone footage, and Google’s work on it was built on top of open-source software. After backlash from workers within the company, Google declined to renew its contract, which ended in March 2019, and the work is now led by Anduril Industries.

Allen used Project Maven as an example of AI at the convergence of military and humanitarian interests: a way to increase accuracy in the use of force, and a tool for others in high-stakes situations where accurate and timely information is paramount. Beyond explicitly military uses, Allen cited the Joint Artificial Intelligence Center’s adaptation of Maven for a humanitarian initiative, “helping first responders identify objects in wildfires and hurricanes.”

After his remarks, Allen faced pushback from speakers almost immediately.

“International law also requires individual human responsibility, and with this new class of weapons there’s been created an accountability gap where it’s unclear who would be held accountable, whether it’s the programmer, the machine itself, or the commander,” said Marta Kosmyna, an organizer from the Silicon Valley chapter of the Campaign to Stop Killer Robots. “Is the commander willing and able to understand how the system works, and how an algorithm reaches its conclusions?”

“We often hear the argument that fully autonomous weapons would be more accurate, fewer weapons would be fired, and it would save lives,” said Kosmyna. “We believe that these things can be achieved with semi-autonomous systems.”

Bow Rodgers, a Vietnam veteran and CEO of the Vet-Tech accelerator, noted that “friendly fire” isn’t, and pointed to the difficulty of distinguishing between targets in combat. He expressed his wish that AI be applied to difficult military problems.

The speed of AI and of battle were cited as reasons the military might want to pursue autonomous weapons, as was a deep concern that other nations might pursue AI weapons without the same ethical constraints as the United States. While there’s sparse polling on American attitudes toward autonomous weapons, existing polls suggest that people are more in favor of the United States developing AI weapons if it is known that other countries are developing the same.

“We are in a period of irregular warfare, where that question of who actually constitutes a combatant, who constitutes an imminent threat is more problematic for humans to address than it has ever been before,” said Lucy Suchman, a professor of anthropology of science and technology at Lancaster University. She asked the board to note the distinction between an AI-enabled weapon firing a bullet at a person more precisely and the AI correctly identifying that the person it decides to shoot is a lawful combatant and the intended target.

As decades of experience with precision weapons have shown, the accuracy of the weapon once fired is a distinct question from the information that went into choosing whom to fire upon. (This is to say nothing of the problems found in AI recognition of people in the civilian sector, including quite possibly intractable category errors that are inherent to the programming.)

“A key problem of ethics in AI is going to be making sure they adhere to those principles in particular cases with particular systems, especially when they’re deployed in particular environments,” said Amanda Askell of the group OpenAI. “An example might be, you deploy a particular navigation system that is going to move vehicles into foreign territory if the details are unlike details it has processed before. That could be [a] particularly disastrous system to deploy, and if you just have no checks or imperfect checks, you could create unintentional conflict by not knowing the system in question.”

Would another nation view such an incursion as a direct military threat or an instance of computer error? Askell said that rather than relying on those imperfect checks, people making policy should work closely with the people making the technology to intimately understand the systems and how they might fail.

“There is no such thing as just introducing new technology,” said Mira Lane, head of ethics and society at Microsoft. After outlining the limits on programmers’ ability to anticipate everything their code will produce, and the ways in which wartime necessity might alter the design of autonomous weapon systems, Lane emphasized the importance of building tight iteration loops and feedback systems into AI to spot flaws early and allow for rapid mitigation and adaptation.

“With every new development of technology, the emergence of new domains of ignorance is inevitable and predictable,” said Lane.

Kelsey Atherton blogs about military technology for C4ISRNET, Fifth Domain, Defense News, and Military Times. He previously wrote for Popular Science, and also created, solicited, and edited content for a group blog on political science fiction and international security.