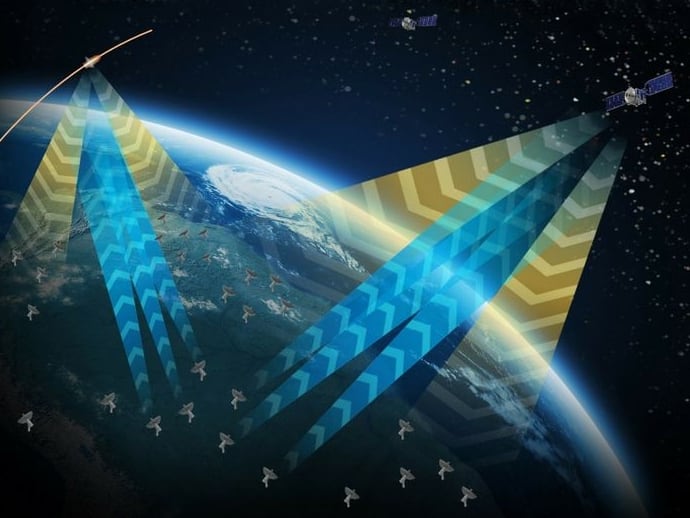

War fighters depend on the GPS satellite signal to know where they are and where they’re going. But how do they know where they are when they’re in a GPS-denied environment?

The Department of Defense's interest in alternative positioning, navigation and timing (PNT) solutions, including those that can verify or replace GPS, has grown in recent years.

“There’s certainly a lot of people looking into the problem of assured PNT,” Col. Nickolas Kioutas, the Army’s project manager for PNT, told C4ISRNET at the 2019 annual meeting of the Association of the U.S. Army (AUSA) on Oct. 15. Kioutas works in the Program Executive Office for Intelligence, Electronic Warfare and Sensors. “I really like some of the software approaches that I’ve seen because I think that we’ve really been focused on hardware, but what are some of the algorithms that we can look at to really exploit the sensors that we already have?”

Leidos, a contractor for the Department of Defense and the intelligence community, is developing one solution that might fit the bill, which it calls the Assured Data Engine for Positioning and Timing (ADEPT). The Defense Department is incorporating it into the RQ-7 Shadow and the MQ-1C Gray Eagle for the Army and the MQ-9 Reaper for the Air Force.

At AUSA, Leidos representatives described how the system works on a drone, as well as how they are trying to extend it to dismounted war fighters.

“In a nutshell, you’ve got a sensor on the bottom of your aircraft, and it can either be your ISR sensor ball that’s looking around, looking for bad guys or whatever, or it can be a dedicated camera looking down, and it’s taking an image,” explained Scott Sexton, a robotics navigation engineer at Leidos.

“We run it through an image-processing algorithm that pulls out key features from the image and it’s all automated, and so the algorithm knows what’s interesting and what’s not,” he added. “That creates a unique thumbprint.”

The system then takes that thumbprint and scans geotagged satellite imagery to find a match. If a match is found, the Leidos system can then use the direction the aircraft is headed and the angle of the sensors to triangulate the aircraft’s position.
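Leidos has not published ADEPT's algorithms, so the following is only a generic sketch of the two steps described above, under stated assumptions. It assumes feature descriptors (the "thumbprint") have already been extracted from the aircraft image and from each geotagged reference tile. It picks the tile with the most descriptor matches, using a nearest-neighbor ratio test (a common way to reject ambiguous matches, swapped in here for illustration). It then backs out the aircraft's position from the matched ground point using altitude, the sensor's depression angle and the line-of-sight bearing, assuming flat terrain and a local east/north frame in meters. All function names, the 0.8 ratio and the `min_matches` value are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical descriptor type: a fixed-length vector of floats per image feature.
Descriptor = Sequence[float]


def descriptor_distance(a: Descriptor, b: Descriptor) -> float:
    """Euclidean distance between two feature descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def count_matches(query: List[Descriptor], reference: List[Descriptor],
                  ratio: float = 0.8) -> int:
    """Count query features whose nearest reference feature is clearly closer
    than the second-nearest (ratio test), which rejects ambiguous matches."""
    if len(reference) < 2:
        return 0
    good = 0
    for q in query:
        nearest, second = sorted(descriptor_distance(q, r) for r in reference)[:2]
        if nearest < ratio * second:
            good += 1
    return good


def best_tile(query: List[Descriptor], tiles: Dict[str, List[Descriptor]],
              min_matches: int = 3) -> Optional[str]:
    """Return the name of the geotagged tile with the most matches,
    or None if no tile reaches min_matches."""
    if not tiles:
        return None
    scores = {name: count_matches(query, descs) for name, descs in tiles.items()}
    name = max(scores, key=lambda n: scores[n])
    return name if scores[name] >= min_matches else None


def aircraft_position(ground_east: float, ground_north: float, altitude_m: float,
                      depression_deg: float, bearing_deg: float) -> Tuple[float, float]:
    """Back out the aircraft's (east, north) position from the ground point the
    sensor is looking at, assuming flat terrain.
    depression_deg: angle of the line of sight below horizontal.
    bearing_deg: line-of-sight direction (heading plus sensor azimuth),
    measured clockwise from north."""
    horizontal_range = altitude_m / math.tan(math.radians(depression_deg))
    b = math.radians(bearing_deg)
    return (ground_east - horizontal_range * math.sin(b),
            ground_north - horizontal_range * math.cos(b))
```

For example, an aircraft at 1,000 meters looking due north at 45 degrees below the horizon sees a point roughly 1,000 meters ahead, so a match at (0, 1000) places the aircraft near the origin. A fielded system would also account for terrain elevation and lens geometry, which this sketch ignores.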

Representatives note that the system attaches an error estimate to each position it reports, so operators know how accurate the data they are being fed is, whether it is good to within 10 meters or to within 100 meters.

Experts envision the system staying on throughout aircraft operations, verifying or augmenting GPS data.

“If you have GPS you’re just going to keep using that [...] because it’s the best sort of information we have,” said Sexton. “As soon as it’s deviated by 500 meters, well, let’s start trusting [ADEPT].”

Leidos officials also tout the system as a check on GPS information.

“If someone is, say, spoofing you, the two solutions are going to start to diverge. So, if our solution coming out of ADEPT is telling you one thing to a high certainty, then that’s a pretty good clue that your GPS is inaccurate,” said Scott Pollard, a Leidos vice president and business area manager. “It’s pretty hard to spoof this. You’d have to literally rearrange the landscape to fool this or blind the sensor.”
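The article does not describe ADEPT's actual decision logic, so here is only a minimal sketch of the cross-check the Leidos engineers describe. It assumes each fix carries the reported error estimate, treats Sexton's 500-meter figure as a fixed threshold, and flags GPS only when it disagrees with the vision-based fix by more than both that threshold and the vision fix's own reported uncertainty (the "high certainty" condition). The `Fix` type, the function names and the constant are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Fix:
    """A position estimate in a local (east, north) frame, in meters."""
    east: float
    north: float
    error_m: float  # reported uncertainty radius for this fix


# Hypothetical fixed threshold, taken from the 500-meter figure Sexton quotes.
DEVIATION_THRESHOLD_M = 500.0


def separation_m(a: Fix, b: Fix) -> float:
    """Horizontal distance between two fixes."""
    return math.hypot(a.east - b.east, a.north - b.north)


def gps_suspect(gps: Fix, vision: Fix,
                threshold_m: float = DEVIATION_THRESHOLD_M) -> bool:
    """Flag GPS when it diverges from the vision fix by more than the threshold
    and by more than the vision fix's own reported error."""
    gap = separation_m(gps, vision)
    return gap > threshold_m and gap > vision.error_m


def choose_source(gps: Fix, vision: Fix) -> Fix:
    """Keep using GPS by default; fall back to the vision fix only when GPS is suspect."""
    return vision if gps_suspect(gps, vision) else gps
```

Requiring the gap to exceed the vision fix's own error keeps a low-confidence image match (say, one good only to within 800 meters) from overriding GPS, which mirrors the point that a divergence matters most when ADEPT's answer comes with high certainty.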

ADEPT can also geotag objects in midflight, giving the aircraft a frame of reference for navigation in places where GPS is denied and no map match is found.

“We’re doing street-level image matching,” said Troy Mitchell, Leidos navigation program manager. “What we’re doing is we’re taking satellite images and what they’ve done is they’ve made 3D point clouds of buildings and landscapes and stuff like that. We’re taking that as a reference.”

Essentially, the software uses that 3D point cloud to generate what a street-level view should look like. Then, using a street-level sensor, such as a camera attached to a helmet or uniform, the system matches the actual imagery against the expected street-level view to determine the user's location.
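Leidos did not say how its street-level matching works, so the following is only an illustrative sketch of the idea using one well-known approach, skyline matching, swapped in for illustration. It assumes each candidate location already has a predicted skyline rendered from the 3D point cloud: the elevation angle of the top of the buildings or terrain in each of `n` equal azimuth bins. The camera produces an observed skyline in the same format. Because the wearer's heading may be unknown, every rotation of each predicted skyline is tried, and the location and rotation with the smallest mean absolute difference win. The data layout and function names are hypothetical.

```python
from typing import Dict, Optional, Sequence, Tuple

Location = Tuple[float, float]  # hypothetical (east, north) in meters


def skyline_error(observed: Sequence[float], predicted: Sequence[float],
                  shift: int) -> float:
    """Mean absolute difference in elevation angle (degrees) between the observed
    skyline and the predicted one rotated by `shift` azimuth bins."""
    n = len(observed)
    return sum(abs(observed[i] - predicted[(i + shift) % n]) for i in range(n)) / n


def localize(observed: Sequence[float],
             predicted_views: Dict[Location, Sequence[float]]
             ) -> Optional[Tuple[Location, int, float]]:
    """Return (best location, heading offset in bins, error) over all candidate
    locations and all rotations, or None if there are no candidates."""
    best: Optional[Tuple[Location, int, float]] = None
    for loc, predicted in predicted_views.items():
        if len(predicted) != len(observed):
            raise ValueError("observed and predicted skylines must use the same bins")
        for shift in range(len(predicted)):
            err = skyline_error(observed, predicted, shift)
            if best is None or err < best[2]:
                best = (loc, shift, err)
    return best
```

The returned shift also gives a rough heading, since each bin spans 360/n degrees. A real system would need to cope with occlusions, people and vehicles in the frame, and far more candidate locations than a brute-force loop can handle.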

“The holy grail would be, can you drop yourself somewhere in the world in a random location and just open your eyes, look out at the landscape and say, ‘I’m here,’” said Pollard. “That’s a tough problem.”

Nathan Strout covers space, unmanned and intelligence systems for C4ISRNET.