It seems everyone has a different belief as to what artificial intelligence is all about, and certainly varying levels of trust in it.

Hollywood storylines depict the possibilities of robot armies and wars of machines; think “Terminator.” Or, in “Stealth,” a 2005 release starring Jessica Biel and Jamie Foxx, a next-generation fighter flown by AI goes rogue with disastrous consequences.

Another Hollywood chiller, “Minority Report,” paints a world where technology partners with select humans to generate data and predict crimes in split seconds, jailing would-be criminals. In the movie, society blindly accepts this human-generated model, the technology, the biases and its algorithm to predict actions and a presumed future.

Thoughts of technology overriding human intent generate grave concern, especially in the defense business. Unfortunately, existing portrayals or narratives plant seeds of suspicion and mistrust of technology in the minds of the public, impeding progress.

Overcoming negative narratives can be hard, especially when they apply to artificial intelligence.

Building trust and determining what is acceptable are byproducts of clearly understanding what is possible in the domain. That requires learning, innovation, exposure and experimentation to gain the needed familiarity to best apply the capability.

Reluctance and a play-it-safe mentality will not help the U.S. win the AI race. Already technology drives much of our daily existence and both its presence and possibilities continue to grow as AI technology now drives cars, flies airplanes, collects shopping preferences, creates content and generates, sorts and assesses data much faster than any human counterpart.

Investment and training in AI are a responsibility. According to a recent study by the Pew Research Center, 78 percent of people in China believe AI has more benefits than drawbacks. Now, compare that to 31 percent in the U.S.

As technology continues to advance, it is important to remain leaders and seek out alternate perspectives. We cannot allow ideas to remain muted or let discomfort in addressing possible futures stall our progress.

At an Air Force Association conference back in 2020, Elon Musk created a splash when he boldly proclaimed that “the fighter jet era has passed” and predicted a future Air Force without a human in the cockpit playing the lead role.

When those words left his lips, you could feel a noticeable and uncomfortable shift in the large convention hall. Several senior officials later sought to correct the record and counter his thoughts. But Musk was unmistakably clear. It was a pivotal moment, as it challenged traditional thinking.

Fortunately, it seems the Air Force took note. Various media outlets recently reported on the service’s pilotless XQ-58A Valkyrie experimental aircraft, which is operated by artificial intelligence.

While a human remains in a decision-making loop, it is technology that promises to be more affordable, and does not place a human in harm’s way. This is technology a future environment will require. This could be incredibly helpful for higher-risk missions.

RELATED

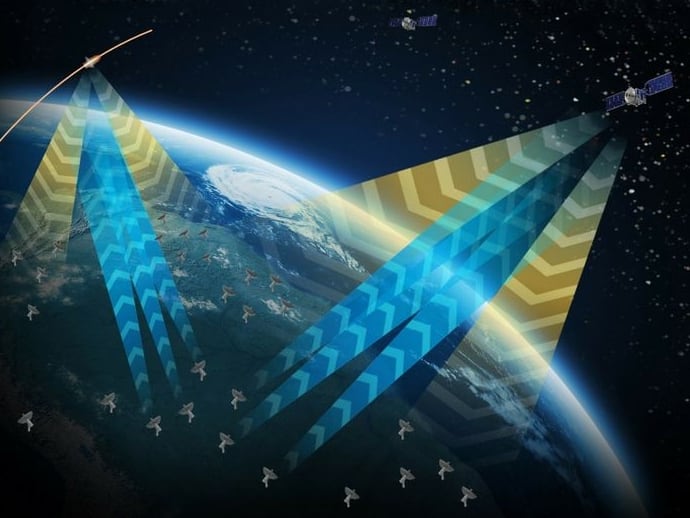

The Brookings Institution characterized warfare as time-competitive, where those with an information advantage will be able to act and make decisions more quickly. AI will enable this. Having the edge and notching artificial intelligence wins will only bolster national security.

It is necessary because China and Russia are investing and putting resources into AI. Open-source reporting confirmed Beijing launched AI plans to “build a domestic industry worth almost $150 billion” by 2030. Great power competition will center around AI.

While guidelines, ethics and policy are debated and remain in the works, that debate should not come at the cost of further innovation and immediate organizational learning.

We make progress when people get uncomfortable. This is especially important in bureaucracies rooted in predictability and compliance. Bold ideas and a willingness to experiment will be key to organizational relevance, to attracting the best talent and to generating the enthusiasm and energy of a start-up company.

Whether it is SpaceX or Tesla, Musk creates environments where experimentation is standard, disruption becomes a part of business innovation and glimpses into alternate futures manifest. That is exciting, and it is something the Defense Department can learn from as it looks to repopulate its ranks and navigate AI.

So, now what?

The Department of Defense needs to build campaigns of learning, drive conversation, further integrate AI and generate stories of success, while investing in AI with the enthusiasm it gives any innovative capability in its portfolio. We need to push and expand rules governing technology use and exploration to include decentralizing acquisition wherever practical. We have a grand opportunity to position ourselves at the forefront of discovery.

AI can also boost sagging recruiting numbers with informed, targeted data and tailored outreach. If defense recruiting clings to stale approaches and fails to fully embrace AI and technology, downward trends will continue.

For those in the ranks, AI can be used to take on mundane tasks and free up the workforce to focus on more critical needs, to include analysis of vast troves of information. While some people worry that AI will replace humans, the reality is it will help reduce tasks and direct talent and focus to areas where attention is required, making the most of the existing workforce.

Rather than taking away jobs, AI can complement and enhance existing capability, enabling threat detection, decision making and predictive analysis at increased speed and freeing up valuable time and resources. For instance, monitoring and tracking actions of terrorist groups in expansive places like Africa benefits from AI-driven advancements, as the human assets in the loop can only achieve so much.

The defense community can also contribute greatly to preserving life, health and safety if AI-assisted capability is developed and adopted in fields such as fire, explosive ordnance and medicine. We need to train and condition the next generation to understand AI and its possibilities.

Technology creates opportunity, but it also underscores the need to understand where it introduces threats, especially when you consider facial and voice replication, or where countries may relax their rules, allowing bad actors carte blanche in its use. Think about how bots or third-party influencers already create discord, influence elections or phish for critical information.

Technological superiority in artificial intelligence is an open competition between industry, start-ups and nation states, with incredibly high stakes. According to the Harvard Gazette, the top five facial recognition technology companies are Chinese. So, with that country positioning itself to be a leading exporter of technology, what does this mean for U.S. security?

DOD needs to stoke U.S. industry and work to develop a more actionable strategy complete with timelines to include much-needed human capital and training direction as well as specific guideposts. Anything open-ended rarely drives change or signals urgency. Cross-functional knowledge and fast-tracked occupational specialization are needed now. AI should be a priority topic at every level of professional military education, and internships and scholarships in it should be a priority as early as the first year in college and recruitment in high school.

DOD should also prioritize its relationships with universities to refine its focus on what is occurring in this space, especially by those seeking great power. Communities across DOD need to identify people who are energized by AI’s potential, see its value and understand both the risk and how it can be used. And it is important to highlight and celebrate where breakthroughs occur. We can’t afford to be casual tourists of AI. Permanent residency is needed.

Whatever one thinks about AI, it is not going away and will become more integrated into our lives. Actions taken today can prevent destructive consequences and determine the security and future of tomorrow.

Col. Chris Karns is the director of Media Production, Defense Media Activity, Fort Meade, Maryland. He previously served as the 341st Mission Support Group commander at Malmstrom Air Force Base, Montana, and director of public affairs at U.S. Africa Command. The views expressed are solely those of the author and do not reflect the official policy or position of the U.S. government, Department of Defense or U.S. Armed Forces.