The United States is playing a game of cat and mouse with adversaries all across the globe.

The U.S. Northern Command is maintaining broad situational awareness so it can deter, detect and defeat threats to the homeland. The U.S. Indo-Pacific Command is posturing the Joint Force to fight and win against regional threats, if required. The U.S. European Command supports NATO in deterring Russia and fortifying Euro-Atlantic security. The U.S. Central Command enables military operations to increase regional security and stability.

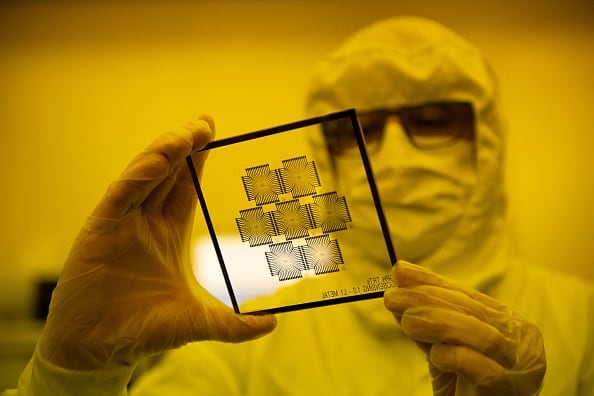

All of these Geographic Combatant Commands are using data-driven technology, including machine learning and artificial intelligence, to act faster than those attempting to undermine the interests of the U.S. and its partners. One specific example at scale is the U.S. Navy’s Task Force 59, which is striving to leverage autonomy and advanced analytics to prevent Iran's Islamic Revolutionary Guard Corps from funding terrorism by smuggling oil in the Arabian Gulf in violation of international sanctions. This monumental task is only possible with AI, deployed through a growing constellation of commercially available sensors and autonomous vessels.

Two factors have consistently impeded the adoption of AI in the U.S. defense and intelligence communities. The first is the slow emergence of a reliable pipeline that can guide machine learning models from development to deployment and persistent redeployment. The second problem, even more fundamental, is a lack of trust in AI systems.

After all, relying on these algorithm-driven systems comes with risk. For example, on the Korean Peninsula or in other areas of heightened tension, a computer vision system monitoring for enemy equipment or positions can be deceived with adversarial patches, causing the ML model to miss what it is meant to recognize.

Put simply, a technologically savvy adversary can render an enemy headquarters, vessel, piece of equipment, or critical infrastructure invisible to the model. In the worst case, even with human review and decision-making in the targeting process, that adversary can induce an erroneous detection that causes the loss of non-combatant life or critical infrastructure. These algorithmic vulnerabilities only exacerbate public distrust, delaying the adoption of needed AI technology, even as adversaries like China invest heavily in its development.
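The patch mechanism described above can be illustrated with a deliberately tiny toy sketch. This is not a real computer vision system: the "detector" is a hypothetical linear scorer over an 8x8 grid of pixel intensities, and every name in it (`WEIGHTS`, `THRESHOLD`, `detect`, `apply_patch`) is an assumption made for illustration. It only shows how overwriting a small region of the input can flip a model's decision.

```python
# Toy illustration of an adversarial patch. All names and numbers are
# hypothetical; real detectors are far more complex than a linear scorer.
SIZE = 8          # images are SIZE x SIZE grids of intensities in [0, 1]
THRESHOLD = 8.0   # score above this means "object detected"


def make_weights():
    """Hypothetical learned weights: the model looks for a bright object in
    the center, but has an exploitable quirk in the top-left corner."""
    w = [[0.0] * SIZE for _ in range(SIZE)]
    for r in range(2, 6):
        for c in range(2, 6):
            w[r][c] = 1.0      # evidence for the object
    for r in range(2):
        for c in range(2):
            w[r][c] = -5.0     # region the model is overly sensitive to
    return w


WEIGHTS = make_weights()


def make_scene():
    """A scene with a bright 4x4 object on a dark background."""
    img = [[0.1] * SIZE for _ in range(SIZE)]
    for r in range(2, 6):
        for c in range(2, 6):
            img[r][c] = 0.9
    return img


def score(image):
    """Weighted sum of pixel intensities."""
    return sum(WEIGHTS[r][c] * image[r][c]
               for r in range(SIZE) for c in range(SIZE))


def detect(image):
    return score(image) > THRESHOLD


def apply_patch(image, top, left, size, value=1.0):
    """Return a copy of the image with a size x size square overwritten."""
    patched = [row[:] for row in image]
    for r in range(top, top + size):
        for c in range(left, left + size):
            patched[r][c] = value
    return patched


clean = make_scene()
patched = apply_patch(clean, top=0, left=0, size=2)
changed = sum(1 for r in range(SIZE) for c in range(SIZE)
              if clean[r][c] != patched[r][c])
print(detect(clean), detect(patched), changed)
```

In this toy setup the object itself is untouched: only 4 of the 64 pixels change, yet the clean scene is detected and the patched one is not. Real attacks on deep networks work on the same principle, but the sensitive region has to be found by optimization rather than read off the weights.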

The U.S. cannot assume any advantage in the AI arms race. To keep up with pacing threats including China, Washington must both accelerate the innovation pipeline and build end-user confidence in AI, through robust testing and validation offered by the private sector. Failure to do so would put the U.S. at a severe disadvantage.

The elements of AI acceleration

The future is already upon us. The advent of AI and other disruptive technologies — including autonomy and augmented, virtual, and mixed reality — is creating a new era, where nations can and will make informed decisions faster than ever before. Data-driven operations have the ability to rapidly accelerate time-to-decision, shifting the balance of global power on a dime. This does not just change the nature of existing threats — new, previously unimagined threats are emerging every day from familiar and new threat actors.

To accelerate AI innovation, the U.S. needs to invest in developing and retaining an AI-focused, AI-literate workforce. The focus should not be merely on innovation for innovation’s sake. Rather, we need to cultivate a widespread understanding of ML at the highest echelons of government, with full awareness of its possibilities, its risks, and, most importantly, why it is so critical that it be embraced quickly. Right now, many decision-makers might still be hesitant, seeing only the unknowns around AI, the headlines falsely touting the rise of sentient AI, or the idea that AI will negatively impact the American workforce.

The proper response to this skepticism is to focus on how AI empowers humans to work faster and make more informed decisions. This is absolutely critical in the defense and intelligence communities, where speed is the ultimate goal of deploying ML models. With widespread education on these technologies, from the battlespace to the boardroom, we can not only assuage these fears but also show the power AI can have, and why it’s essential that the U.S. speed up its adoption.

While education is key, the next step is creating a reliable, repeatable pipeline that shepherds ML models from development to deployment, with a focus on persistent, robust testing and validation. At the core of this concept is trust. In a future defined by algorithmic warfare, government actors must be able to trust that our models will perform effectively and responsibly whenever and wherever they are called upon.

The commercial sector must help us develop world-class independent model testing, performance monitoring, and hardening capabilities as we increasingly use algorithmic decision-making in high-consequence situations. Through processes such as these, the U.S. will be able to deploy models to empower military decision makers in critical theaters like the South China Sea with confidence.
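One way to picture the testing and validation step in such a pipeline is as a gate a model must pass before each deployment or redeployment. The Python sketch below is a minimal illustration under stated assumptions, not a description of any fielded process: `validation_gate`, `GateReport`, the sample data, and the accuracy thresholds are all hypothetical.

```python
# Minimal sketch of a pre-deployment validation gate.
# All names, data, and thresholds are hypothetical.
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

Sample = Tuple[float, int]  # (input feature, expected label)


@dataclass
class GateReport:
    clean_accuracy: float
    stressed_accuracy: float
    approved: bool


def accuracy(model: Callable[[float], int], samples: Sequence[Sample]) -> float:
    """Fraction of samples the model labels correctly."""
    if not samples:
        raise ValueError("cannot evaluate a model on an empty sample set")
    correct = sum(1 for x, y in samples if model(x) == y)
    return correct / len(samples)


def validation_gate(
    model: Callable[[float], int],
    clean: Sequence[Sample],
    stressed: Sequence[Sample],
    min_clean: float = 0.95,     # assumed threshold on ordinary data
    min_stressed: float = 0.80,  # assumed threshold on perturbed data
) -> GateReport:
    """Approve deployment only if the model holds up on both ordinary
    inputs and inputs perturbed to mimic adversarial conditions."""
    clean_acc = accuracy(model, clean)
    stressed_acc = accuracy(model, stressed)
    approved = clean_acc >= min_clean and stressed_acc >= min_stressed
    return GateReport(clean_acc, stressed_acc, approved)


def threshold_model(x: float) -> int:
    """Stand-in for a trained model: label 1 when the feature exceeds 0.5."""
    return 1 if x > 0.5 else 0


clean_set = [(0.9, 1), (0.8, 1), (0.2, 0), (0.1, 0)]
stressed_set = [(0.55, 1), (0.45, 1), (0.52, 0), (0.3, 0)]  # near the boundary

report = validation_gate(threshold_model, clean_set, stressed_set)
print(report)
```

Here the stand-in model is perfect on ordinary data but gets only half of the perturbed samples right, so the gate withholds approval. Running the same gate on every redeployment is what would make the testing persistent rather than a one-time check.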

The time is now

If adversaries create this innovation pipeline first, their technology adoption rate will continue to accelerate. The U.S. must reform its own processes to speed the delivery of this technology to the field, but it must do so in a way that creates trust among decision-makers, warfighters, and everyone in between.

This goal must be pursued with end-user confidence in mind. That confidence will drive increased deployments, which in turn will keep the U.S. and its allies in the lead in the global AI race.

To create this new, efficient process, the government must invest in the technology and processes being developed by the top engineers and data scientists in the country. Time is of the essence, and these minds are standing by to solve the government’s most pressing data problems.

The rest of the world is also working to find these solutions and create a confident AI-driven strategy. Whoever opens the floodgates first — responsibly and with confidence — may well run out ahead of the pack.

Neil Serebryany is CEO of CalypsoAI. David Spirk served as the DoD’s first Chief Data Officer and is Special Advisor to the CEO of CalypsoAI, Senior Counselor at Palantir Technologies, and Senior Advisor at Pallas Advisors.