Imagine an analyst tasked with watching hours of video footage or poring over thousands of images searching for a particular object. What if a machine could process this data for the analyst in seconds, freeing the analyst to spend more time on critical thinking rather than searching databases?

Advancements in automation, machine learning and artificial intelligence are increasingly serving this purpose of “unburdening” analysts. As interest in these technologies grows and they are adopted into operations, it is becoming clearer how they will work.

Leveraging these tools will let staff get back to performing analysis rather than poring over the data itself. Interviews with industry representatives also shed light on exactly how intelligence agencies can use these new technologies. In short, machines will pre-sort or manipulate data and then present their findings to a human for further analysis, eliminating hours of manual labor.

Critical to this development is understanding the difference between artificial intelligence, automation and machine learning.

“I consider AI to be cognition, independent cognition. A true AI would be cognizant of itself and be able to create and create from nothing,” Rob Shaughnessy, senior manager at Deloitte, told C4ISRNET at the annual GEOINT symposium in Tampa, Florida. He noted that industry hasn’t seen this characteristic yet. With machine learning, “it can teach itself, but it’s teaching itself within a tightly defined lane … It’s being given boundaries to work in and learning within those boundaries.”

Shaughnessy added that machine learning is well suited to providing curated intelligence information to an analyst, but not to making decisions. AI, by contrast, would make an absolute decision, or the best decision.

However, “you don’t always want to make the best decision. There could be political reasons to not make what is the best logical decision. That’s for humans to do and that will always be for humans to do,” he said.

Others described the process of sifting through data to reach a decision as layered.

“We look at analytics as, after you have the data, what’s the intelligence you can get out of that data? Most of those analytics is how, with software, you can automatically produce some of these insights,” Jane Chappell, vice president of global intelligence solutions at Raytheon, told C4ISRNET at the same conference. “Machine learning, then, is just after you’ve done that and seen, ‘hey six times in a row when this happens, then that happens.’ That’s really the machine learning effort.

“Then AI ― which really in this industry we’re really not at an AI standpoint yet ― that’s just the code thinking on its own. We’re really not to the point right now. We’re [not] applying AI at any kind of scale in industry.”
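Chappell’s “six times in a row” description of machine learning can be made concrete with a toy example. The sketch below is an illustration under stated assumptions, not Raytheon’s approach: it scans a hypothetical log of event labels, measures the longest run of occurrences of one event that were each immediately followed by another, and treats a run of six (echoing the quote) as a learned pattern. The event names and the `min_streak` parameter are invented for illustration.

```python
from typing import Sequence


def follows_streak(events: Sequence[str], cause: str, effect: str) -> int:
    """Longest run of consecutive `cause` events that were each immediately
    followed by `effect` in the event log."""
    longest = current = 0
    for i, event in enumerate(events):
        if event != cause:
            continue
        if i + 1 < len(events) and events[i + 1] == effect:
            current += 1  # the pattern held again
            longest = max(longest, current)
        else:
            current = 0  # the pattern broke; start counting again
    return longest


def pattern_learned(events: Sequence[str], cause: str, effect: str,
                    min_streak: int = 6) -> bool:
    """Hypothetical rule: treat the pattern as learned after min_streak repeats."""
    return follows_streak(events, cause, effect) >= min_streak


# Hypothetical event log: every convoy departure is followed by site activity.
log = ["convoy_departs", "site_activity"] * 6
print(pattern_learned(log, "convoy_departs", "site_activity"))  # True
```

Real machine learning systems learn statistical patterns from far messier data; a hard-coded count like this only captures the intuition in the quote.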

After all, content or data is just that until it is turned into intelligence.

What can machine learning do for analysts?

“You can collect pictures of ‘x’ for forever but if you’re not making sense out of any of it, you just have pictures of ‘x’ and that doesn’t help anybody,” Mike Manzo, director of intelligence, threat and analytic solutions at General Dynamics, told C4ISRNET at GEOINT. “It’s content until we make sense of it. That sense could be made with a machine or that sense could be made with an analyst. Where it’s heading is it’s going to be made with both. Where the machine is really going through the monotony part and the analyst is really providing that value-added piece and that’s really where things are going.”

Officials within the U.S. government have stressed that these new processes are not designed to eliminate humans but to make them better at their jobs, and that it is imperative to use emerging technology to make sense of the deluge of data and drive insights.

“Automated intelligence using machines, I call it that way because I’m not interested in the technology artificial intelligence, I’m interested in the outcomes we achieve,” Principal Deputy Director of National Intelligence Sue Gordon said during a keynote presentation at the show.

Gordon praised the National Geospatial-Intelligence Agency and its director for the agency’s aggressive adoption of these technologies. During the conference, NGA Director Robert Cardillo announced an ambitious goal of applying automation, artificial intelligence and augmentation to every image NGA ingests by the end of this year.

Put simply, leaders want these technologies to sift through the data and alert analysts to anything significant.

“Specific to a customer, like here at GEOINT and NGA, analysts are poring through mounds and mounds of data. We need to get the analyst focused on the analysis. Looking at what’s important to determine what the best courses of actions are, whether they’re national security or policy or the warfighter,” Damian DiPippa, senior vice president and general manager of the mission and intelligence solutions business unit at ManTech, told C4ISRNET.

Manzo explained that the key is identifying pain points from an analyst’s perspective and having systems automated in the background so that analysts can get to what they want to do, which is their job.

“What we’re hearing from them is I want to be an analyst, I don’t want to be a data gatherer, I don’t want to be a discoverer,” he said.

Manzo continued: “I want to know my data is pre-populated for me or is pre-stationed for me so when I get in in the morning I’m not spending two-thirds of my day hunting for the things I know I need to do my job … I found all my data, so that’s two-thirds of my day, now I have one-third of my day just to go through it all and by the time my day’s finished I haven’t actually gotten that far because I’ve spent most of it looking for and now discovering.”

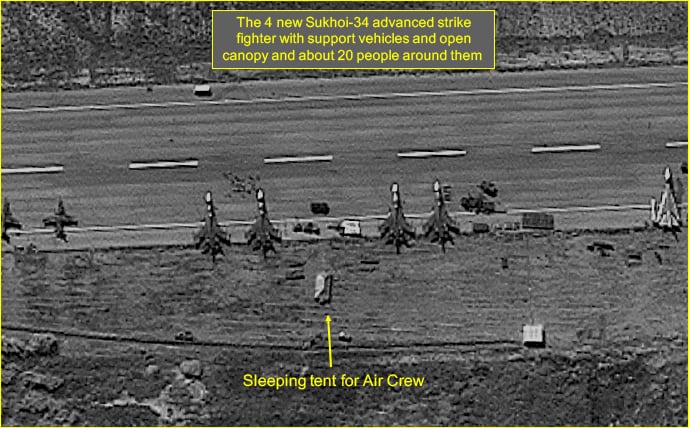

Manzo illustrated how a human working alongside a machine might operate in practice, using the example of an analyst tasked with monitoring North Korean missile launches. The analyst knows that for a launch to occur, a clear space has to be found, and a transporter erector launcher has to be present along with fuel trucks and perhaps people who aren’t normally in the area.

The machine can look at imagery data and alert the human when certain thresholds are met. After receiving an alert, the analyst can take back control and apply critical thinking and expertise to determine whether what is being observed is in fact preparation for a launch or simply a training exercise.
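The alerting step Manzo describes amounts to checking observations against a set of indicators and notifying a human when enough of them appear. A minimal sketch, assuming the indicators have already been extracted from imagery and reduced to yes/no flags per site; the indicator names, the site label and the 75% threshold are all hypothetical choices, not General Dynamics’ system.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical indicators based on the launch example; a real pipeline would
# derive these flags from detections in imagery.
LAUNCH_INDICATORS = ("cleared_area", "tel_present", "fuel_trucks", "unusual_personnel")


@dataclass
class Alert:
    site: str
    observed: List[str]
    score: float  # fraction of indicators present


def check_site(site: str, observations: Dict[str, bool],
               threshold: float = 0.75) -> Optional[Alert]:
    """Return an Alert for the analyst if enough indicators are present, else None."""
    observed = [name for name in LAUNCH_INDICATORS if observations.get(name, False)]
    score = len(observed) / len(LAUNCH_INDICATORS)
    if score >= threshold:
        return Alert(site, observed, score)
    return None  # below threshold: nothing for the analyst yet


alert = check_site("site-A", {"cleared_area": True, "tel_present": True, "fuel_trucks": True})
if alert:
    print(f"Review {alert.site}: {', '.join(alert.observed)} ({alert.score:.0%})")
```

Returning the observed indicators alongside the score is a deliberate choice: the alert tells the analyst why it fired, which is the starting point for judging launch preparation versus a training exercise.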

Deloitte, similarly, displayed a machine learning capability that extracted a wealth of insight from a video in a matter of seconds. The system provided frame-by-frame analysis, detection of objects in the video and detection of concepts such as power or time. It then provided timestamps for when these items appeared in the video, along with a full timestamped transcript, saving the analyst from having to watch the entire video.
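Producing timestamps from frame-by-frame detections is largely bookkeeping. The sketch below assumes an object detector (not shown) has already labeled each frame and that the video’s frame rate is known; it merges consecutive frames with the same label into time intervals an analyst could jump to. It is an illustration, not Deloitte’s implementation, and the labels are made up.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def detections_to_timestamps(frames: List[Set[str]],
                             fps: float) -> Dict[str, List[Tuple[float, float]]]:
    """frames[i] is the set of labels detected in frame i.
    Returns {label: [(start_seconds, end_seconds), ...]} for each run of
    consecutive frames in which the label was detected."""
    intervals: Dict[str, List[Tuple[float, float]]] = defaultdict(list)
    open_since: Dict[str, int] = {}  # label -> first frame of its current run
    for i, labels in enumerate(frames):
        for label in labels:
            open_since.setdefault(label, i)
        # Close the run of any label that is no longer detected.
        for label in list(open_since):
            if label not in labels:
                start = open_since.pop(label)
                intervals[label].append((start / fps, i / fps))
    # Close runs still open when the video ends.
    for label, start in open_since.items():
        intervals[label].append((start / fps, len(frames) / fps))
    return dict(intervals)


frames = [{"tank"}, {"tank"}, set(), {"tank", "truck"}]
print(detections_to_timestamps(frames, fps=2.0))
# {'tank': [(0.0, 1.0), (1.5, 2.0)], 'truck': [(1.5, 2.0)]}
```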

Additionally, Deloitte demoed what Shaughnessy described as one of the simplest examples to look at but one of the hardest things to do from a machine learning perspective. The machine was able to learn what an M1 Abrams tank was based on a video of an Abrams spinning around. Moreover, the machine began to pick out unique identifiers of the tank, such as its tracks, and to identify a different variant of the vehicle.

Having the machine identify tanks in pictures or full-motion video doesn’t just make a human’s job easier, Shaughnessy said; it changes the job, because analysts can ask better and more complex questions.

Unburdening the analyst

A more striking example of a problem technology can solve for analysts is monitoring behavior at a fixed location. Sometimes analysts have to stare at a particular building or compound, monitoring every vehicle and person that enters and tracking where they go when they leave, Josh Nauman, chief engineer at Harris Geospatial Solutions, told C4ISRNET. While the analyst is following a vehicle out of the compound, they have no idea what else is going in and out of that building, so they have to go back to where they started and watch the footage again.

Harris is providing solutions that let a machine watch the compound through full-motion video and imagery feeds, track vehicles and people going in and out, and alert the analyst to every instance. The system can also identify:

- Similarities, where there’s a known route of interest and the machine alerts the analyst any time a vehicle travels that route;

- Multiarea association, which monitors multiple locations at once and provides notifications anytime there is track activity between these locations;

- Meeting and clustering, which monitors anomalous tracks, watch boxes and trip wires where an analyst is monitoring checkpoints, and notifies the analyst when there is movement.
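Watch boxes and multiarea association can both be expressed as simple geometric checks on tracks. The sketch below assumes each track is a time-ordered list of `(time, x, y)` points from an upstream tracker and that areas are axis-aligned rectangles; the `WatchBox` class, the area names and the coordinates are hypothetical, and this is not a description of Harris’ product.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

TrackPoint = Tuple[float, float, float]  # (time, x, y) from a hypothetical tracker


@dataclass(frozen=True)
class WatchBox:
    """Axis-aligned rectangular area of interest."""
    name: str
    xmin: float
    ymin: float
    xmax: float
    ymax: float

    def contains(self, x: float, y: float) -> bool:
        return self.xmin <= x <= self.xmax and self.ymin <= y <= self.ymax


def entries(track: Sequence[TrackPoint], box: WatchBox) -> List[float]:
    """Times at which the track moves from outside the box to inside it.
    A track first observed inside the box counts as an entry."""
    times: List[float] = []
    was_inside = False
    for t, x, y in track:
        inside = box.contains(x, y)
        if inside and not was_inside:
            times.append(t)
        was_inside = inside
    return times


def associates(track: Sequence[TrackPoint], origin: WatchBox,
               destination: WatchBox) -> bool:
    """Multiarea association: the track enters `origin` and later enters `destination`."""
    return any(tb > ta for ta in entries(track, origin)
               for tb in entries(track, destination))


compound = WatchBox("compound", 0, 0, 10, 10)
depot = WatchBox("depot", 50, 50, 60, 60)
vehicle = [(0, -5, 5), (1, 5, 5), (2, 20, 20), (3, 55, 55)]
print(entries(vehicle, compound))            # [1]
print(associates(vehicle, compound, depot))  # True
```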

Nauman said greater adoption of machine learning could create a new problem: alert overload. Depending on how good the algorithms are, they might trigger false alarms that the analyst then has to investigate.

“We went from object extraction to now, ‘Are these extractions real, how do we validate them?,’ maybe a secondary analytic to validate the detections,” he said.
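One simple form a secondary check could take is a persistence filter: pass along a detection only if the detector reports it with reasonable confidence across several consecutive frames, on the assumption that genuine objects persist while many spurious detections flicker. This is a generic technique offered as an illustration, not the analytic Nauman described; the confidence threshold, frame count and labels are hypothetical.

```python
from typing import Dict, List, Set


def validate(frames: List[Dict[str, float]], min_conf: float = 0.6,
             min_frames: int = 3) -> Set[str]:
    """frames[i] maps each label the primary detector reported in frame i to its
    confidence. Returns labels seen at or above min_conf in at least
    min_frames consecutive frames."""
    run: Dict[str, int] = {}  # label -> length of its current consecutive run
    confirmed: Set[str] = set()
    for detections in frames:
        strong = {label for label, conf in detections.items() if conf >= min_conf}
        # Labels missing or weak in this frame drop out, resetting their runs.
        run = {label: run.get(label, 0) + 1 for label in strong}
        confirmed.update(label for label, n in run.items() if n >= min_frames)
    return confirmed


frames = [
    {"launcher": 0.9, "truck": 0.7},
    {"launcher": 0.8, "truck": 0.3},  # truck confidence dips: its run resets
    {"launcher": 0.95},
    {"truck": 0.9},
]
print(validate(frames))  # {'launcher'}
```

The trade-off is sensitivity: requiring a longer run suppresses more false alarms, but it can also suppress brief, real events.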

Another issue is the accuracy of such algorithms. To learn to recognize an object, the machine needs images of that object to learn from. For example, Deloitte trained its system to identify a bus using 20 images from Google. In a military context, however, analysts might not have 20 images of the object they want the machine to identify, and the images they do have might not be high resolution.

Even more complicated is teaching the machine to identify a specific tank or bus.

“Something like ‘not a person’ or ‘not a car’ but ‘your car’ is more complex,” Shaughnessy said. “You have to identify what are the unique features of your car.” These can include markings or dents that distinguish the specific object from all similar objects.

Mark Pomerleau is a reporter for C4ISRNET, covering information warfare and cyberspace.