There is no monopoly on autonomy. Figuring out how machines are going to interpret the world, and then act on that interpretation, is a military science as much as an academic and commercial one, and it should come as no surprise that arms manufacturers in Russia are starting to develop their own AI programs. Kalashnikov, an arms maker that’s part of the larger Rostec defense enterprise, announced last week that it has developed some expertise in machine learning.

The announcement came during the “Digital Industry of Industrial Russia 2018” conference, reported TASS. Machine learning is a broad field around a relatively simple concept: training a program to sort inputs (say, parts of a picture that are street signs, parts of a picture that are not street signs), and then replicate that same learning on brand new inputs, based only on what it knows of prior examples. In a military context, that can mean everything from identifying specific types of vehicles to simply seeing what is and isn’t road. It’s hard to speak to any given development in the technology without seeing it in practice.
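To make that sorting idea concrete, here is a minimal sketch in Python of one of the simplest possible learners, a nearest-centroid classifier. It is an illustration only and says nothing about what Kalashnikov has built: the function names `train` and `classify`, the two-number features, and the example values are all made up for this sketch.

```python
from math import dist

def train(examples):
    """Average the feature vectors seen for each label (one centroid per label)."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def classify(centroids, features):
    """Assign a brand new input to the label whose centroid is closest."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

# Hypothetical features for image patches: (redness, edge sharpness).
# These numbers are invented for illustration, not real data.
labeled_patches = [
    ((0.9, 0.8), "sign"),
    ((0.8, 0.9), "sign"),
    ((0.2, 0.1), "not sign"),
    ((0.1, 0.3), "not sign"),
]

model = train(labeled_patches)

# A patch the program has never seen, judged only against prior examples.
prediction = classify(model, (0.85, 0.7))
print(prediction)
```

Real systems use far richer features and models, but the shape is the same: learn from labeled examples, then apply what was learned to inputs that were not in the training set.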

“There has not been such automation of Russian weapons before of the kind described here - ‘self-learning’ is a new concept that is a result of more advanced software and hardware developments dealing with AI,” says Samuel Bendett, a research analyst at the Center for Naval Analyses and Russia Studies Fellow at the American Foreign Policy Council. “Practical examples of such technologies - not just public announcements - are a true reflection of how far Russian R&D has progressed in this area, so the real proof would be public testing and evaluation of such weapons by the Russian Ministry of Defense.”

To that end, Bendett says we might see demonstrations of machine learning in smart weapons modules advertised by Kalashnikov, though without an explicit announcement. Kalashnikov’s product line ranges from small arms (including, yes, the AK series of rifles) up through precision weapons and unmanned vehicles.

“If Kalashnikov’s R&D has indeed progressed to the point where such ‘self-learning’ is ready for application, we can expect to see it tested on certain unmanned platforms,” says Bendett. “Kalashnikov was also working on combat modules such as armored turrets that could be armed with machine learning algorithms for better target authentication and destruction.”

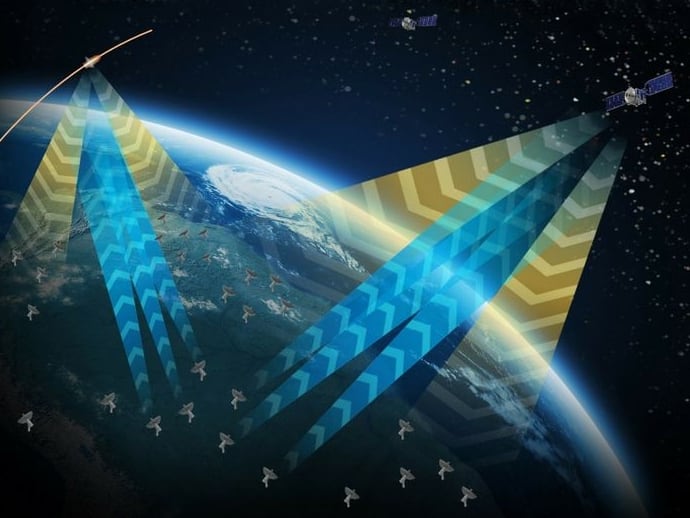

Another possibility for machine learning would be in the electronic warfare space. Given that most of EW is already about machines receiving and interpreting signals invisible to human perception, this is fertile ground for autonomous processes.

“Other manufacturers working on equipping military systems with AI and machine learning are the manufacturers of electronic warfare technologies,” says Bendett. “For example, Russian military recently announced that its newest ‘Bylina’ EW platform will have elements of AI that would allow it to quickly identify potential threats and accurately target them.”

Autonomy in electronic warfare doesn’t raise the same immediate visceral concern in people as it does with more kinetic, explosion-causing or bullet-firing weapons, but overall the principles of autonomous weapons are the same, and given how integral electronic systems are to almost everything in modern combat, it’d be a mistake to write off an autonomous electronic warfare machine as inherently less-than-lethal. Understanding the full picture of autonomous weapons means thinking beyond bullets, and it means thinking about international law, too. Last month, the European Union quietly removed a clause from a defense funding bill that would have prevented that money from going towards autonomous weapons, instead deferring to international law. International law remains unclear and unsettled on lethal autonomy, and in its absence militaries across the globe are going ahead and planning for some greater degree of autonomous technologies in the future.

“At this point, Russian position with respect to developing weapons armed with AI and machine learning is best exemplified by its official stance with respect to UN’s Lethal Autonomous Weapons Systems - there will be a man in the decision-making loop with respect to such AI-driven weapons,” says Bendett, “but nations (in this case, Russia) have the sovereign right in building and testing new technologies without international oversight or interference.”

Kelsey Atherton blogs about military technology for C4ISRNET, Fifth Domain, Defense News, and Military Times. He previously wrote for Popular Science, and also created, solicited, and edited content for a group blog on political science fiction and international security.