Thirty years ago, on September 23, 1992, the United States conducted its 1,054th nuclear weapons test.

When that test, named Divider, was detonated in the morning hours underground in the Nevada desert, no one knew it would be the last U.S. test for at least the next three decades. But by 1992, the Soviet Union had formally dissolved, and the United States government issued what was then seen as a short-term moratorium on testing that continues today.

This moratorium came with an unexpected benefit: the end of nuclear weapons testing ushered in a revolution in high-performance computing, one with wide-ranging impacts on national and global security that few are aware of. The need to maintain our nuclear weapons in the absence of testing drove an unprecedented requirement for increased scientific computing power.

At Los Alamos National Laboratory in New Mexico, where the first atomic bomb was built, our primary mission is to maintain and verify the safety and reliability of the nuclear stockpile. We do this using nonnuclear and subcritical experiments coupled with advanced computer modeling and simulations to evaluate the health and extend the lifetimes of America’s nuclear weapons.

But as we all know, the geopolitical landscape has changed in recent years, and while nuclear threats still loom, a host of other emerging crises threaten our national security.

Pandemics, rising sea levels and eroding coastlines, natural disasters, cyberattacks, the spread of disinformation, energy shortages: we’ve seen first-hand how these events can destabilize nations, regions and the globe. At Los Alamos, we’re addressing these threats with the high-performance computing developed over decades to simulate nuclear weapons’ explosions at extraordinarily high fidelity.

When the Covid pandemic first took hold in 2020, our supercomputers were used to help forecast the spread of the disease, as well as model vaccine roll-out, the impact of variants and their spread, counties at high risk for vaccine hesitancy and impacts of various vaccine distribution scenarios. They also helped model the impact of public health orders, such as face-mask mandates, in stopping or slowing the spread.

That same computing power is being used to better understand DNA and the human body at fundamental levels. Researchers at Los Alamos created the largest simulation to date of an entire gene of DNA, a feat that required one billion atoms to model and will help researchers better understand and develop cures for diseases such as cancer.

The Laboratory is also using the power of secure, classified supercomputers to look at the national security implications of the changing climate. For years, our climate models have been used to predict the Earth’s responses to changes with ever-increasing resolution and accuracy. But the utility of our climate models to the national security community has been limited. That’s changing, thanks to recent advances in modeling, increased resolution and computing power, and the pairing of climate models with infrastructure and impacts models.

Now we’re able to use our computing power to look at changes in the climate at extraordinarily high resolution in areas of interest. Because the work is on secure computers, we don’t reveal to potential adversaries exactly where (and why) we’re looking. In addition, using these supercomputers allows us to integrate classified data into the models, which can further improve accuracy.

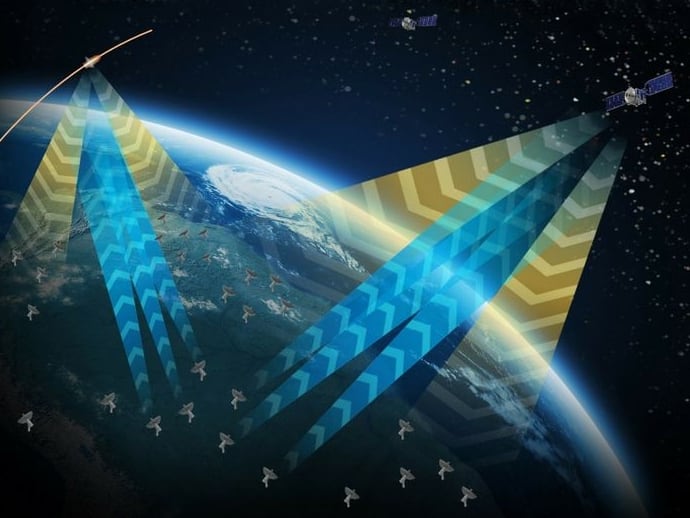

Supercomputers at Los Alamos are also being used for earthquake prediction, assessing the impacts of eroding coastlines, wildfire modeling and a host of other national security-related challenges. We’re also using supercomputers and data analytics to optimize our nonproliferation threat detection efforts.

Of course, our Laboratory isn’t alone in this effort. The other Department of Energy labs are using their supercomputing power to address similar and additional challenges. Likewise, private companies that are pushing the boundaries of computing also help advance national security-focused computing efforts, as does the work of our nation’s top universities. As the saying goes, a rising tide lifts all boats.

And we have the moratorium on nuclear weapons testing, at least in part, to thank. Little did we know, 30 years ago, how much we would benefit from the supercomputing revolution that followed. As a nation, continuing to invest in supercomputing ensures not only the safety and effectiveness of our nuclear stockpile, but also advanced scientific exploration and discovery that benefit us all. Our national security depends on it.

Bob Webster is the deputy director for Weapons at Los Alamos National Laboratory. Nancy Jo Nicholas is the associate laboratory director for Global Security, also at Los Alamos.

Have an Opinion?

This article is an Op-Ed and the opinions expressed are those of the authors. If you would like to respond, or have an editorial of your own you would like to submit, please email C4ISRNET Senior Managing Editor Cary O’Reilly.