The Department of Defense’s Chief Digital and Artificial Intelligence Office, established in February, is responsible for accelerating the adoption of emerging technologies such as artificial intelligence, with the goal of creating decision advantage from the boardroom to the battlefield.

And according to U.S. Deputy Secretary of Defense Kathleen Hicks, applying responsible AI (RAI) across warfighting, enterprise support and business practices will be essential to securing this advantage while also improving national security.

The RAI mission is to build robust, resilient and dependable AI systems while also becoming a leading advocate in the global dialogue on AI ethics. Whether RAI is integrated thoughtfully will determine how well that mission succeeds. Moreover, robotic process automation (RPA), which uses software robots that mimic human actions, must be an integral component in helping the DoD achieve its RAI mission.

To that end, RPA combined with RAI will free up warfighters from repetitive tasks so they can participate in professional development opportunities, maintain battlefield advantage against U.S. adversaries, support cybersecurity measures and simulate real-world scenarios for training. Additionally, combining RPA and RAI will build Pentagon personnel’s trust in and knowledge of future RAI processes.

How Automation Supports Responsible AI

One way to describe how RPA supports RAI is to view AI as the “brain” and RPA as the “hands” for warfighting capabilities. A brain can dream up concepts but can’t implement them without hands. At the same time, hands without a brain can’t oversee advanced processes. It’s when the two technologies are combined that advanced defense tasks will be successful.

By combining RPA and RAI, defense personnel will create intelligent automation (IA) that automates both administrative and warfighting tasks, such as processing personnel data, predicting mechanical failures in weapons platforms and performing complex analyses that support the warfighter and improve defense capabilities.

Deploying emerging technologies such as RPA and RAI alongside legacy systems can be a challenge for any government agency, especially one as large as the DoD. Defense officials who use RAI for decision-making will need to understand both what it can do for them and where its limits lie. Defense personnel must also know how to use RAI and how to run an algorithm, just as they would operate any other battlefield technology or device.

By utilizing an AI-based RPA platform throughout RAI deployment, the Pentagon can achieve many of the goals outlined in its IT modernization plans, including:

— Accelerating overall RAI adoption by helping to solve the challenges of AI deployment and moving systems quickly into production

— Mitigating security risks by applying machine learning (ML) models to problems with unpredictable outcomes

— Improving AI systems’ capacity for national and global collaboration, which enables the DoD to work with more agility internally and with U.S. allies and eliminates organizational bottlenecks and work silos

— Ensuring that RAI capabilities align with operational and U.S. defense needs by allowing interaction with human employees for validation and correction
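
The last goal, human validation and correction, can be made concrete with a small sketch. The Python below is only an illustration under stated assumptions, not a description of any DoD or vendor system: the `Prediction` and `Decision` types, the `route` function and the 0.9 confidence threshold are all hypothetical. It shows one common pattern, in which an automated step accepts high-confidence model output and hands everything else to a person whose answer is recorded as a correction when it differs from the model's.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Prediction:
    """Hypothetical model output for one work item."""
    item_id: str
    label: str
    confidence: float  # assumed to be in the range 0.0 to 1.0


@dataclass
class Decision:
    """Final outcome, recording who decided and whether the model was overridden."""
    item_id: str
    label: str
    source: str      # "model" or "human"
    corrected: bool  # True when the human label differs from the model label


def route(
    pred: Prediction,
    review: Callable[[Prediction], str],
    threshold: float = 0.9,  # illustrative value, not a recommended setting
) -> Decision:
    """Accept confident predictions; send the rest to a human reviewer."""
    if pred.confidence >= threshold:
        return Decision(pred.item_id, pred.label, "model", False)
    human_label = review(pred)
    return Decision(pred.item_id, human_label, "human", human_label != pred.label)


if __name__ == "__main__":
    # Stand-in for a real review queue: this reviewer always flags the item.
    def reviewer(p: Prediction) -> str:
        return "needs_maintenance"

    print(route(Prediction("engine-1", "ok", 0.97), reviewer))
    print(route(Prediction("engine-2", "ok", 0.55), reviewer))
```

In a design like this, the recorded corrections could later be used to audit the model or to retrain it, which is one way continuous human oversight might be built into an automated workflow.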

Examples of how the Defense Department can use RPA as it moves forward include:

— Minimizing unintended bias in AI capabilities, detecting and avoiding unintended consequences from defense systems, and retaining the ability to deactivate systems that display unintended behavior, a key principle outlined in the DoD’s “5 Principles of Artificial Intelligence Ethics”

— Modernizing the data governance process by automating data protection and data leakage prevention

— Streamlining the requirements validation process by utilizing automated application lifecycle management

— Fostering a shared understanding of RAI among foreign governments by automating and standardizing many of the steps involved in RAI development and deployment

— Augmenting the DoD’s most critical asset, its people, by offloading manual, time-intensive tasks to automation, freeing them to focus on high-value, mission-critical activities

Automation, RAI and National Security: What’s Next?

The future of national security will be intelligent and automated. And as the DoD noted in its AI Strategy and Implementation Pathway Report, “RAI is a journey to trust.”

To that end, RAI should not be treated as an end state in which a system is designated “responsible” once and never revisited.

RAI requires continuous oversight. Defense agencies should move beyond traditional uses of automation and AI to include workforce, cultural, organizational and governance aspects. Additionally, RPA infused with RAI capabilities will allow the DoD to evaluate all defense processes, such as cybersecurity measures, using automation, AI and data.

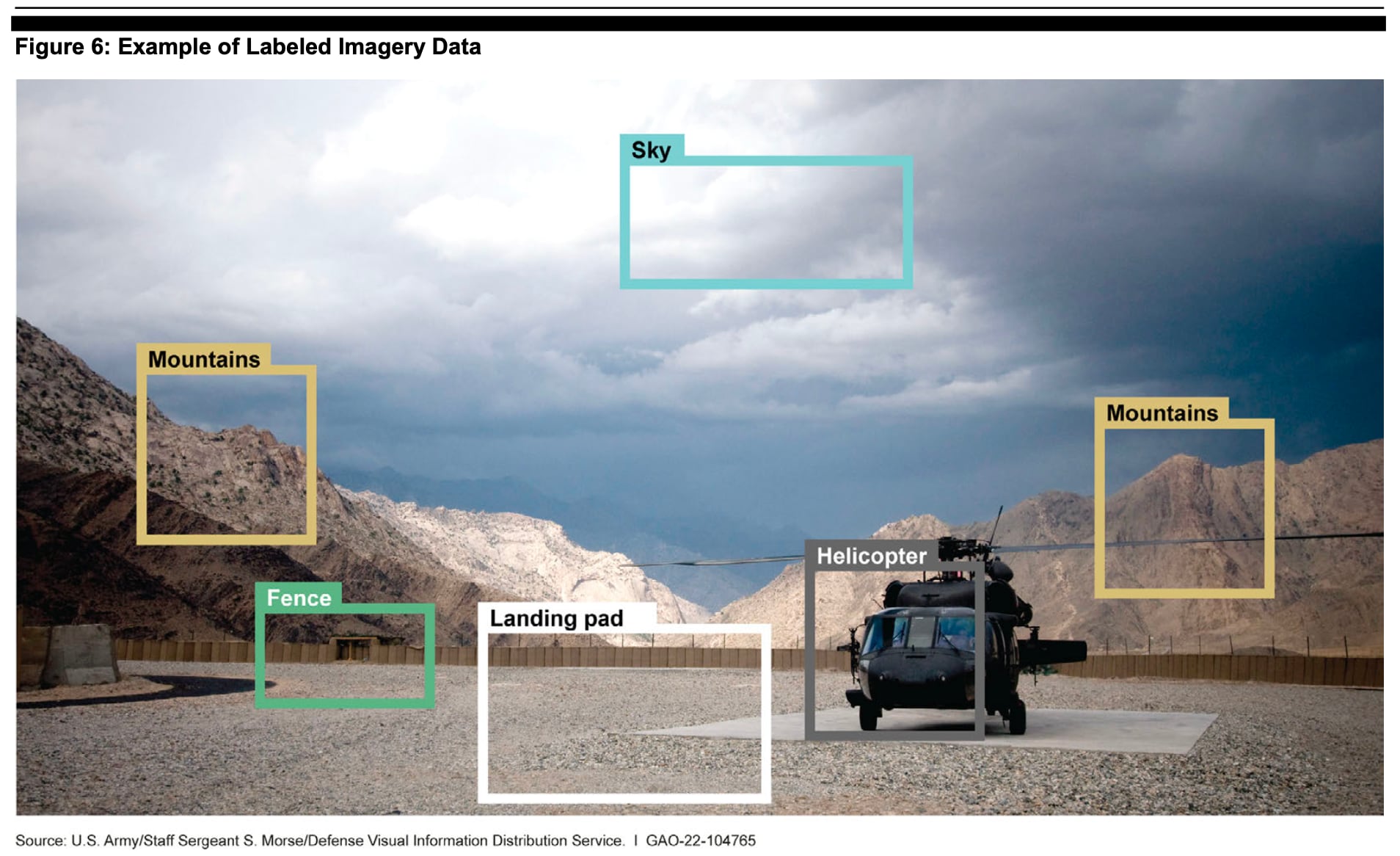

As the DoD moves forward with its IT modernization plans, defense officials should incorporate other emerging technology, such as machine learning. Currently, the DoD is applying machine learning in multiple areas, including overhead satellite imagery, human language recognition and full-motion video, which allows the military to track high-value targets in real time while reducing collateral damage.

Future uses for automation and RAI in national defense will also include contextual adaptation, in which computers adapt to new situations without being retrained and can explain to the warfighter why certain decisions were made.

For example, the department might develop an AI-enabled, fully autonomous naval ship that uses algorithms to help it navigate situations it was not trained for, such as inclement weather or contested waters, according to Brian Mazanec, director of the Government Accountability Office’s Defense Capabilities and Management team.

And lastly, as AI systems start to process information similarly to humans, the ethical development and use of automation and RAI in combat and non-combat applications will be critical for national security now and in the future as global threats increase.

Jerrod McBride is regional vice president at UiPath, a global company that makes robotic process automation software.

This article is an Op-Ed and the opinions expressed are those of the author.