Tactically efficient soldiers psychologically hedge against the consequences of war. Why? Because hedging is a behavior that’s trained over time to increase effectiveness in the face of extreme uncertainty on the battlefield. Maximizing the perception of normality in lethal scenarios makes the soldiers’ physiological response to the unknown more predictable.

Conventional training in support of that hedging centers on range qualifications, physical fitness, equipment-based instruction, and unit exercises, whereas unconventional units may have additional access to sports psychologists and human performance experts. In both areas, the goal is to rewire residual biological synapses from the soldier’s pre-military life. That is, the end state is to create muscle memory around activities such as engaging an adversarial element or carrying a 60-pound ruck.

The process of cognitive re-engineering – or creating a “bulletproof mind” – is not only doctrinally cemented but also culturally ingrained. For example, there is widespread organizational adoption of processes such as stress inoculation and aggression calibration targeted at conventional military parameters.

Yet, the conflict landscape is changing. Offsets are shifting from peripheral advantages such as weaponry (precision munitions, nuclear technology) to more anthropomorphic ones. In this new landscape, the offset isn’t a machine gun but a hyper-enabled operator. The problem is that the military hasn’t yet adjusted its training paradigm to accommodate modern offsets.

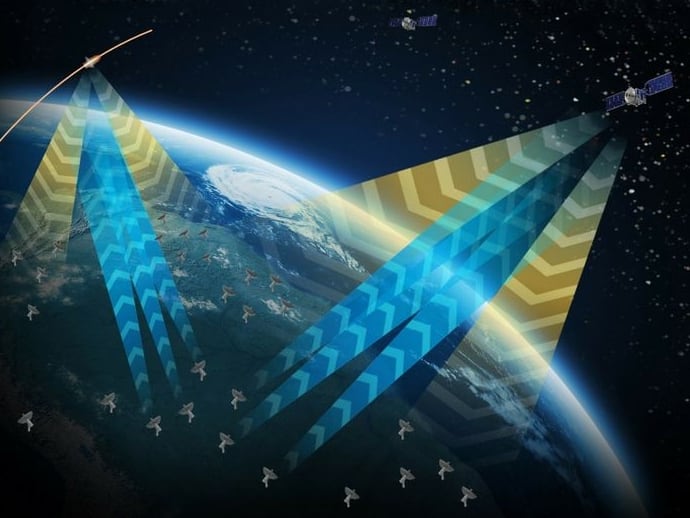

Take, for example, the introduction of artificial intelligence (AI) to the defense space. AI-enabled systems instigate a new era of teaming dynamics within the forces. Where “teaming” was previously thought of as human to human or even human to equipment, AI is an amalgamation of the two. It is neurological pathways architected into machines. Tactically, it’s forward units supported by autonomous vehicle convoys; analysts augmented by real-time multi-intelligence fusion; and swarm technology that maximizes ground movement and efficiency.

It’s been six years since the Pentagon first standardized its reporting on autonomy and two years since it stood up a cross-functional team dedicated to artificial intelligence. Moreover, the Defense Department’s newest AI strategy calls for “cultivating an AI workforce” and “investing in comprehensive AI training”. Nevertheless, there’s still no formalized training around how soldiers are expected to team with AI.

The lack of AI training and readiness is staggering when compared to other offsets and initiatives adopted by the military. Take, for example, the $572 million allocated to train soldiers in large-scale subterranean facilities. The justification? That the landscape of warfare was changing from asymmetric conflict to fighting in “mega-cities.”

Another example is the widespread Glock adoption by Special Forces units around 2015. Prior to that move, the weapon was tested and trained on. In fact, military spokesmen linked their decision to field the Glock to operator feedback, meaning that service members were given access to and training on the system ahead of any scaled acquisition. Yet, the average Soldier has had limited to no interaction with AI in their workspaces.

Arguably, artificial intelligence will underpin every aspect of the military fighting domain moving forward. All within the last year, the National Defense Strategy directed the services to use AI; Congress called it a “reasonable assumption” that AI will enhance ground force operational effectiveness; and the White House dedicated an entire executive order to outlining how government must “train a workforce capable of using AI in their occupations”.

Given government momentum toward AI acceleration, the services must ready their forces for AI teaming or risk missing the tipping point. Similarly, even though the “quick win” approach levied by the Pentagon’s AI outfit has achieved some tactical successes, there must be an equal (if not greater) emphasis on sustainable frameworks for integrating those quick wins across the enterprise.

The military can do this by pulling its cognitive engineering approach through from other training domains to the AI training domain. Given the uncertainty and fear around artificial intelligence fueled by popular culture, cognitive engineering in the AI space would give soldiers the same psychological hedging mechanisms that have been so successful for other training applications. By doing so, the Pentagon would cultivate the AI workforce it has deemed necessary for future success.

The lack of trained AI experts in the military space necessitates partnering with industry leaders until the Defense Department’s own human capital is fully developed. Such a partnership would bring the robust expertise of scientists, cognitive engineers, and methodologists. Yet, the true value added will be in leveraging the very specific pool of highly cleared scientists, cognitive engineers, and methodologists with mission-specific experience. Such a group represents the unique bridge that AI training for military adopters must maintain – one where the complex nature of AI is translated effectively for non-technical soldier audiences.

The Pentagon cannot execute the executive’s National Defense Strategy or its own artificial intelligence strategy without forces ready to carry out AI policies, use AI-enabled systems, or make decisions on AI-dominated battlefields.

AI training can target all three features of future warfare by empowering the services with broad understanding, technical instruction, and teaming exercises. In an era where operational offsets are limited and where technological superiority is constantly challenged, the services cannot afford to neglect AI adoption.

Crosby is a senior lead scientist for Booz Allen Hamilton’s strategic innovation group. In her current role, Crosby employs artificial intelligence solutions across the Department of Defense. She holds a Ph.D. in decision sciences, has multiple operational deployments, and is a certified Army instructor.